The journal ACM Transactions on the Web has just accepted our article “Language-Agnostic Modeling of Source Reliability on Wikipedia”. The main goal of this project was to generate and assess actionable metrics for source controversiality in Wikipedia. To guarantee universality (i.e., applicability to all Wikipedia language editions) and knowledge equity, and to avoid dependence on the specifics of any given language, this joint work with Jacopo D’Ignazi (Universitat Pompeu Fabra / ISI Foundation), Andreas Kaltenbrunner (Universitat Oberta de Catalunya / ISI Foundation / Universitat Pompeu Fabra), Yelena Mejova (ISI Foundation), Michele Tizzani (ISI Foundation), Kyriaki Kalimeri (ISI Foundation) and Mariano Beiró (University of San Andres / CONICET) relies on language-agnostic approaches that mainly use data from editing activity. Our findings have been instrumental for the Wikimedia Foundation project on Reference Risk.

Abstract:

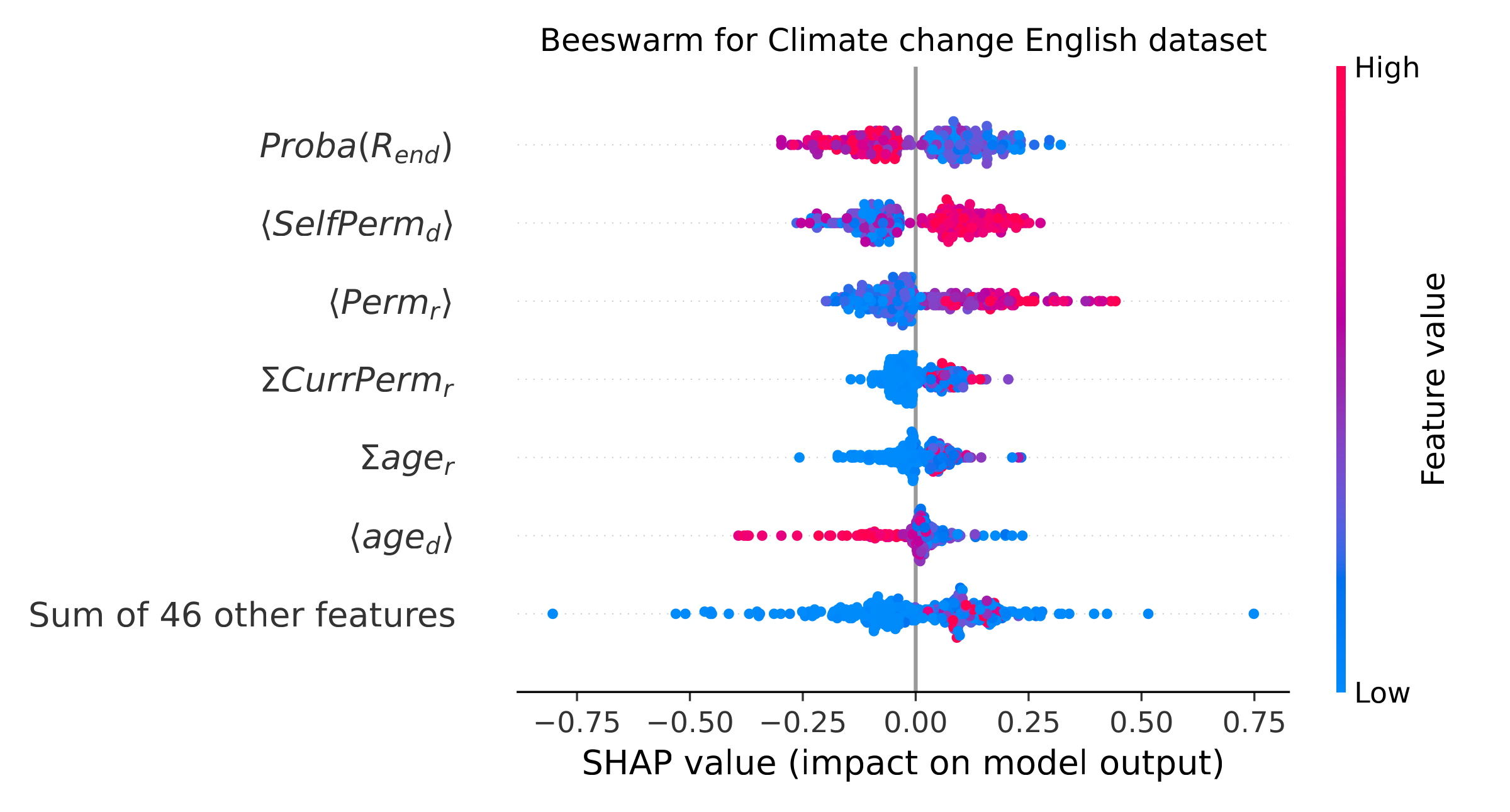

Over the last few years, verifying the credibility of information sources has become a fundamental need to combat disinformation. Here, we present a language-agnostic model designed to assess the reliability of web domains as sources in references across multiple language editions of Wikipedia. Utilizing editing activity data, the model evaluates domain reliability within different articles of varying controversiality, such as Climate Change, COVID-19, History, Media, and Biology topics. Crafting features that express domain usage across articles, the model effectively predicts domain reliability, achieving an F1 Macro score of approximately 0.80 for English and other high-resource languages. For mid-resource languages, we achieve 0.65, while the performance of low-resource languages varies. In all cases, the time the domain remains present in the articles (which we dub as permanence) is one of the most predictive features. We highlight the challenge of maintaining consistent model performance across languages of varying resource levels and demonstrate that adapting models from higher-resource languages can improve performance. We believe these findings can assist Wikipedia editors in their ongoing efforts to verify citations and may offer useful insights for other user-generated content communities.
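
For readers unfamiliar with the metric reported in the abstract, here is a minimal sketch of how a macro-averaged F1 score is computed: the F1 score of each class is calculated separately and the results are averaged with equal weight, so a rare class counts as much as a common one. The binary labels (1 = reliable, 0 = unreliable) and the toy predictions below are assumptions made up for illustration; they are not data or code from the article.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the class never occurs.
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical toy labels: 1 = reliable domain, 0 = unreliable domain.
y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1]

score = macro_f1(y_true, y_pred)
print(f"Macro F1: {score:.3f}")
```

In this toy case the per-class F1 scores are 0.5 for the unreliable class and 0.75 for the reliable class, so the macro average is 0.625 even though four of the six predictions are correct.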